[Solved-3 Solutions] How to use Pig in Python?

What is Pig?

- Apache Pig is a high-level data flow platform for executing MapReduce programs on Hadoop. Its language is Pig Latin.

- Pig scripts are converted internally to MapReduce jobs and executed on data stored in HDFS. Every task that can be achieved with Pig can also be achieved with Java MapReduce code.

Python

- Python is an object-oriented, high-level, interpreted, dynamic, multipurpose programming language.

- Python is easy to learn, yet it is a powerful and versatile scripting language, which makes it attractive for application development.

- Python's syntax and dynamic typing, together with its interpreted nature, make it an ideal language for scripting and rapid application development in many areas.

Problem:

How to use Pig in Python?

Solution 1:

- Another option for using Python with Hadoop is PyCascading. Instead of writing only the UDFs in Python/Jython, or using streaming, we can put the whole job together in Python, using Python functions as "UDFs" in the same script that defines the data processing pipeline.

- Jython is used as the Python interpreter, and Cascading is the MapReduce framework for the stream operations (joins, groupings, etc.).

Here is an example:

    # PyCascading's helpers module is assumed to provide the names used below
    from pycascading.helpers import *

    @map(produces=['word'])
    def split_words(tuple):
        # This is called for each line of text
        for word in tuple.get(1).split():
            yield [word]

    def main():
        flow = Flow()
        input = flow.source(Hfs(TextLine(), 'input.txt'))
        output = flow.tsv_sink('output')

        # This is the processing pipeline
        input | split_words | GroupBy('word') | Count() | output

        flow.run()
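
To check the logic of a pipeline like this before running it on a cluster, the same split, group, and count steps can be mimicked in plain Python. The sketch below uses neither PyCascading nor Hadoop; `count_words` is a hypothetical helper name, and the input is assumed to be an in-memory list of text lines.

```python
from collections import Counter


def split_words(line):
    # Mirrors the map step: emit one word at a time from a line of text.
    for word in line.split():
        yield word


def count_words(lines):
    # Mirrors GroupBy('word') | Count(): tally how often each word occurs.
    counts = Counter()
    for line in lines:
        counts.update(split_words(line))
    return dict(counts)
```

Calling `count_words` on a handful of sample lines gives a quick reference result to compare against the job's output.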

Solution 2:

- When we use streaming in Pig, it doesn't matter what language we use; all Pig does is execute a command in a shell.

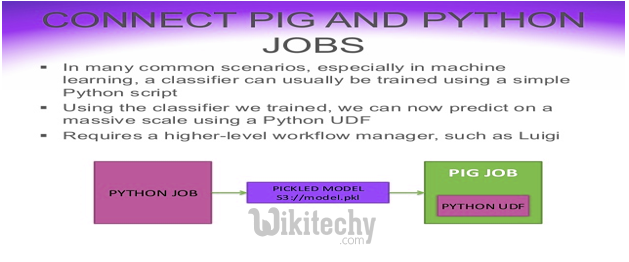
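
As a rough illustration of the streaming approach, here is a minimal Python script that could serve as the streamed command. It assumes Pig's default streaming format of one tab-delimited record per line on stdin and stdout. The file name `upper_first.py` and the function names are hypothetical.

```python
# upper_first.py (hypothetical file name)
# Pig side, for reference:
#   DEFINE upper_cmd `python upper_first.py` SHIP('upper_first.py');
#   B = STREAM A THROUGH upper_cmd;
import sys


def transform(line):
    """Upper-case the first field of one tab-delimited record."""
    fields = line.rstrip("\n").split("\t")
    fields[0] = fields[0].upper()
    return "\t".join(fields)


def run(stream_in, stream_out):
    # Read one record per line and write one transformed record per line.
    for line in stream_in:
        stream_out.write(transform(line) + "\n")


# Only process stdin when input is piped in, as it is under Pig streaming.
if __name__ == "__main__" and not sys.stdin.isatty():
    run(sys.stdin, sys.stdout)
```

Because the script only reads and writes text, it can be tried locally by piping a small tab-separated file through it before using it from Pig.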

- We can use Python. Pig UDFs can now be defined natively in Python; these UDFs are called via Jython when they are executed.
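
A Python UDF of this kind might look like the sketch below. It assumes Pig's Jython engine makes the `outputSchema` decorator available when it loads the script. The fallback definition exists only so the file can also be imported and tested outside Pig. The file name `udfs.py`, the alias `myfuncs`, and the function `normalize_word` are hypothetical.

```python
# udfs.py (hypothetical file name)
# Pig side, for reference:
#   REGISTER 'udfs.py' USING jython AS myfuncs;
#   B = FOREACH A GENERATE myfuncs.normalize_word(word);

try:
    outputSchema  # assumed to be provided by Pig's Jython engine
except NameError:
    # Fallback so the module also works outside Pig (for local testing).
    def outputSchema(schema):
        def decorator(func):
            func.outputSchema = schema
            return func
        return decorator


@outputSchema("word:chararray")
def normalize_word(word):
    """Trim and lower-case a word; pass nulls through unchanged."""
    if word is None:
        return None
    return word.strip().lower()
```

Handling `None` explicitly matters because Pig passes null fields to the UDF rather than skipping them.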


Solution 3:

- On a recent project, we used Python UDFs and occasionally ran into float vs. double type mismatches, so be warned.

- Our impression is that the support for Python UDFs may not be as solid as the support for Java UDFs, but overall, it works fine.
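
One possible way to reduce such mismatches, sketched here as an assumption rather than a confirmed fix, is to declare `double` in the UDF's output schema and convert inputs explicitly. Python's `float` is a double-precision value, so a schema that declares `float` may disagree with what the function returns. The function name `scale` is hypothetical, and the fallback decorator exists only so the code runs outside Pig.

```python
try:
    outputSchema  # assumed to be provided by Pig's Jython engine
except NameError:
    # Fallback so the module also works outside Pig (for local testing).
    def outputSchema(schema):
        def decorator(func):
            func.outputSchema = schema
            return func
        return decorator


@outputSchema("scaled:double")
def scale(value, factor):
    """Multiply two numeric fields, always returning a Python float."""
    if value is None or factor is None:
        return None
    # Explicit conversion keeps the result type stable for int or string input.
    return float(value) * float(factor)
```

On the Pig side, casting the input fields to `double` before calling the UDF keeps both ends of the call on the same type.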