sqoop - sqoop1 vs sqoop2 - apache sqoop - sqoop tutorial - sqoop hadoop

sqoop1 vs sqoop2 - Service Level Integration

Sqoop1:

- Hive, HBase

- Require local installation

- Oozie – von Neumann(esque) integration:

- Package Sqoop as an action

- Then run Sqoop from node machines, causing one MR job to depend on another MR job

- Error-prone, difficult to debug

Sqoop2:

- Hive, HBase

- Server-side integration

- Oozie

- REST API integration

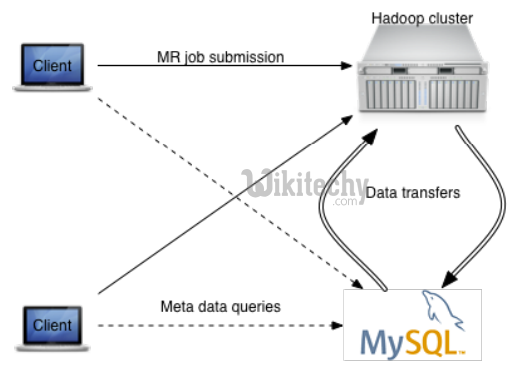

Sqoop1 Architecture :

- Cryptic, contextual command line arguments

- Tight coupling between data transfer and output format

- Security concerns with openly shared credentials

- Installation and configuration are not easy to manage

- Connectors are forced to follow JDBC model
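
To make the points about command line arguments and shared credentials concrete, here is a minimal Python sketch that only assembles (and does not run) a typical Sqoop1 import command. The host, database, user, password, and table values are made up for illustration; the flags are standard Sqoop1 import options. Note how the password ends up as a plain argument that anyone who can read the script or the process list can see.

```python
import shlex


def sqoop1_import_command(jdbc_url, username, password, table,
                          num_mappers=4, hive_import=False):
    """Build the argument list for a Sqoop1 import run from a client machine."""
    args = [
        "sqoop", "import",
        "--connect", jdbc_url,              # JDBC URL: the connector follows the JDBC model
        "--username", username,
        "--password", password,             # credential passed in clear text
        "--table", table,
        "--num-mappers", str(num_mappers),  # the user picks the parallelism
    ]
    if hive_import:
        # The output format is chosen in the same command as the transfer itself
        args.append("--hive-import")
    return args


# Illustrative values only (hypothetical host, user, and table)
cmd = sqoop1_import_command(
    "jdbc:mysql://db.example.com/sales", "etl_user", "s3cret", "orders",
    hive_import=True,
)
print(shlex.join(cmd))
```

Every user who runs such a command needs the JDBC driver, database connectivity, and the credentials on their own machine.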

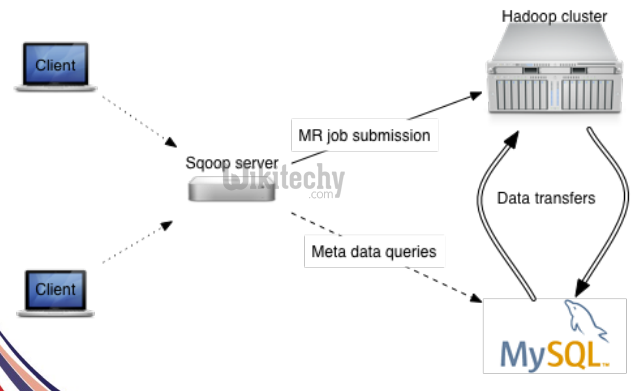

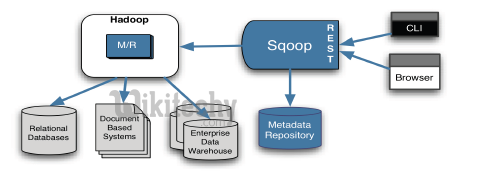

Sqoop2 Architecture :

Sqoop1: Client side Tool

- Connectors are installed/configured locally

- Local installation requires root privileges

- JDBC drivers are needed locally

- Database connectivity is needed locally

Sqoop2: Sqoop as a Service

- Connectors are installed/configured in one place

- Managed by administrator and run by operator

- JDBC drivers are needed in one place

- Database connectivity is needed on the server

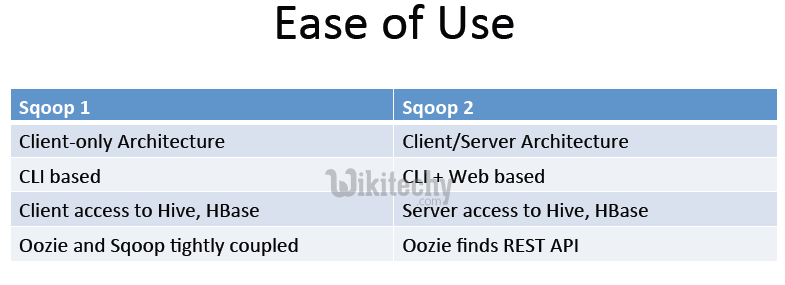

Client Interface

Sqoop1:

- Command line interface (CLI) based

- Can be automated via scripting

Sqoop2:

- CLI based (in either interactive or script mode)

- Web based (remotely accessible)

- REST API is exposed for external tool integration
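
As a rough illustration of the REST option, the sketch below builds (but does not send) an HTTP request that an external tool might issue to a Sqoop2 server. The host name is hypothetical, and the port `12000` and the `/sqoop/version` path are assumptions based on a default Sqoop2 setup; check them against the documentation for your server version.

```python
from urllib.parse import urljoin
from urllib.request import Request


def sqoop2_request(server_url, resource):
    """Prepare a GET request for a resource on a Sqoop2 server (not sent here)."""
    base = server_url.rstrip("/") + "/"          # make sure urljoin keeps the base path
    url = urljoin(base, resource.lstrip("/"))
    return Request(url, headers={"Accept": "application/json"}, method="GET")


# Assumed default base URL; the host name is made up for this example
req = sqoop2_request("http://sqoop2.example.com:12000/sqoop", "version")
print(req.get_method(), req.full_url)
```

Passing `req` to `urllib.request.urlopen` would send it. Because the client only needs HTTP access to the server, no JDBC driver or database connectivity is required on the calling machine.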

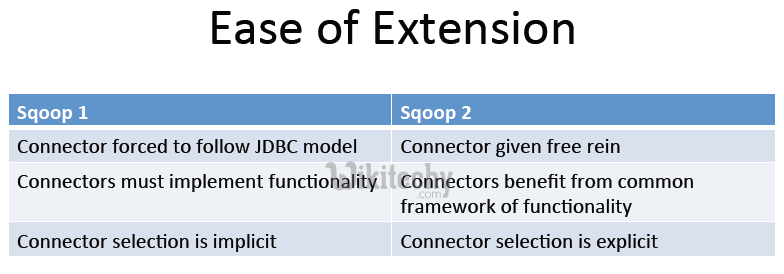

Implementing Connectors :

Sqoop1:

- Connectors are forced to follow JDBC model

- Connectors are limited/required to use common JDBC vocabulary (URL, database, table, etc.)

- Connectors must implement all Sqoop functionality they want to support

- New functionality may not be available for previously implemented connectors

- Require knowledge of database idiosyncrasies

- e.g. Couchbase does not need a table name, but one is required, so --table gets overloaded to mean a backfill or dump operation

- e.g. null string representation is not supported by all connectors

- Functionality is limited to what the implicitly chosen connector supports

Sqoop2:

- Connectors can define own domain

- Connectors are only responsible for data transfer

- Common Reduce phase implements data transformation and system integration

- Connectors can benefit from future development of common functionality

- Less error-prone, more predictable

- Couchbase users need not care that other connectors use tables
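
The division of labour in the Sqoop2 connector model can be sketched in a few lines of Python. This is an illustration of the idea only, not Sqoop2's actual (Java) connector API: the class names, the `extract` method, and the config keys are all hypothetical. Each connector defines its own vocabulary and only moves records, while a shared step handles a common concern (here, the null string representation) once for every connector.

```python
from abc import ABC, abstractmethod


class Connector(ABC):
    """Hypothetical connector: responsible for data transfer only."""

    @abstractmethod
    def extract(self, config):
        """Yield records (dicts) read from the external system."""


class TableConnector(Connector):
    """Connector whose domain is tables and rows."""

    def extract(self, config):
        for row in config["rows"]:
            yield dict(row)


class KeyValueConnector(Connector):
    """Connector whose domain is key/value documents; it has no table concept."""

    def extract(self, config):
        for key, value in config["documents"].items():
            yield {"key": key, "value": value}


def run_import(connector, config, null_string="\\N"):
    """Common step shared by all connectors: normalise nulls after extraction."""
    return [
        {k: (null_string if v is None else v) for k, v in record.items()}
        for record in connector.extract(config)
    ]


table_result = run_import(TableConnector(), {"rows": [{"id": 1, "note": None}]})
kv_result = run_import(KeyValueConnector(), {"documents": {"k1": None}})
print(table_result)
print(kv_result)
```

Because null handling lives in `run_import`, a connector written before that feature existed gets it without any changes, and the key/value connector never has to pretend it has a table.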

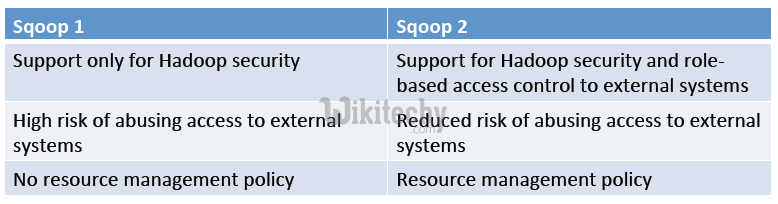

Sqoop2 – Security :


Sqoop1:

- Inherit/Propagate Kerberos principal for the jobs it launches

- Access to files on HDFS can be controlled via HDFS security

- Limited support (user/password) for secure access to external systems

Sqoop2:

- Inherit/Propagate Kerberos principal for the jobs it launches

- Access to files on HDFS can be controlled via HDFS security

- Support for secure access to external systems via role-based access to connection objects

  - Administrators create/edit/delete connections

  - Operators use connections

Sqoop2 – External System Access :

Sqoop1:

- Every invocation requires the credentials needed to access external systems (e.g. relational database)

- Workaround: create a user with limited access in lieu of giving out password

- Does not scale

- Permission granularity is hard to obtain

- Hard to prevent misuse once credentials are given

Sqoop2:

- Connections are enabled as first-class objects

- Connections encompass credentials

- Connections are created once and then used many times for various import/export jobs

- Connections are created by administrator and used by operator

- Safeguard credential access from end users

- Connections can be restricted in scope based on operation (import/export)

- Operators cannot abuse credentials
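
The idea of connections as first-class objects can be modelled with a small Python sketch. It is a toy model under stated assumptions, not Sqoop2 code: the `Connection` and `ConnectionRegistry` classes, the role names, and all values are hypothetical. It shows an administrator creating a connection once, an operator reusing it by name without ever seeing the password, and the connection being restricted to one operation.

```python
from dataclasses import dataclass, field


@dataclass
class Connection:
    """Hypothetical connection object that encapsulates credentials."""
    name: str
    jdbc_url: str
    username: str
    password: str = field(repr=False)  # never shown when the object is printed
    allowed_operations: frozenset = frozenset({"import", "export"})


class ConnectionRegistry:
    """Toy server-side store: administrators create, operators use."""

    def __init__(self):
        self._connections = {}

    def create(self, role, connection):
        if role != "administrator":
            raise PermissionError("only administrators may create connections")
        self._connections[connection.name] = connection

    def submit_job(self, role, connection_name, operation, table):
        if role not in ("administrator", "operator"):
            raise PermissionError("unknown role")
        conn = self._connections[connection_name]
        if operation not in conn.allowed_operations:
            raise PermissionError(f"{operation} is not allowed on {conn.name}")
        # The job refers to the connection by name; credentials stay on the server
        return {"connection": conn.name, "operation": operation, "table": table}


registry = ConnectionRegistry()
registry.create(
    "administrator",
    Connection("orders-db", "jdbc:mysql://db.example.com/sales",
               "etl_user", "s3cret", frozenset({"import"})),
)
job = registry.submit_job("operator", "orders-db", "import", "orders")
print(job)
```

An operator asking for an `export` on this connection, or trying to call `create`, gets a `PermissionError`.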

Sqoop2 – Resource Management :

Sqoop1:

- No explicit resource management policy

- Users specify the number of map tasks to run

- Cannot throttle load on external systems

Sqoop2:

- Connections allow specification of resource management policy

- Administrators can limit the total number of physical connections open at one time

- Connections can also be disabled
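
One way to picture such a policy is a counting semaphore attached to the connection. The Python sketch below is only a model of the concept, with a hypothetical `ThrottledConnection` class and made-up limits; it does not reflect how the Sqoop2 server implements throttling.

```python
import threading


class ThrottledConnection:
    """Hypothetical connection with an administrator-defined limit."""

    def __init__(self, name, max_physical_connections, enabled=True):
        self.name = name
        self.enabled = enabled
        self._slots = threading.BoundedSemaphore(max_physical_connections)

    def acquire(self):
        """Try to open one physical connection; return True on success."""
        if not self.enabled:
            return False                          # disabled connections accept no work
        return self._slots.acquire(blocking=False)

    def release(self):
        """Close one physical connection and free its slot."""
        self._slots.release()


conn = ThrottledConnection("orders-db", max_physical_connections=2)
attempts = [conn.acquire() for _ in range(3)]     # third attempt exceeds the limit
print(attempts)

conn.release()                                    # one task finishes
retry = conn.acquire()                            # a waiting task can now proceed
print(retry)
```

However many map tasks a user requests, the external system never sees more than the configured number of open connections, and setting `enabled` to `False` stops new work entirely.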